Impact Convergence 2016 – Kiwis on the ground in Atlanta

Guest Blogger: Julian King

Impact measurement and evaluation belong together like grits and okra. I learned this (and sampled the southern delicacies) in Atlanta, Georgia on 24-26 October at Impact Convergence (IMPCON) 2016. IMPCON brought together members of Social Value International (SVI) and the American Evaluation Association (AEA) to explore areas of common interest and develop an action agenda to advance the state of the art of impact measurement.

My take-homes from IMPCON are that the world needs a mix of public and, increasingly, private funding to address social disadvantage – and we need to make this funding count by investing in things that actually work. This requires us to collect good evidence – but more than that, we need to think beyond measurement to consider how power is shared, which values matter, and how to use those values systematically to support good decisions and have positive impacts for communities.

To understand what works, we need to measure its impact. We also need the kind of intel that comes from having real conversations with real people. A mix of evidence (numbers and stories) can give us a sound understanding of whether a programme is really changing lives, and how. That’s not all. We also need a way to make sense of the evidence. We need a strong foundation of values or principles to guide us when we look at the evidence and make judgements about how well solutions are working. That’s what evaluation is: making sound judgements based on evidence+values.

Consider a dashboard of indicators. Perhaps it’s for an education programme. Some indicators are going up, some are going down. Some are moving a little, and some are moving a lot. Some of the indicators are expressed in dollars and some in other units, e.g., test scores. Some indicators might be more important than others. This panel of indicators will not be much use to you or me unless we have an agreed basis for interpreting the numbers, so that we can answer the big question: how well is our social investment performing? Impact measurement without evaluation is just numbers.

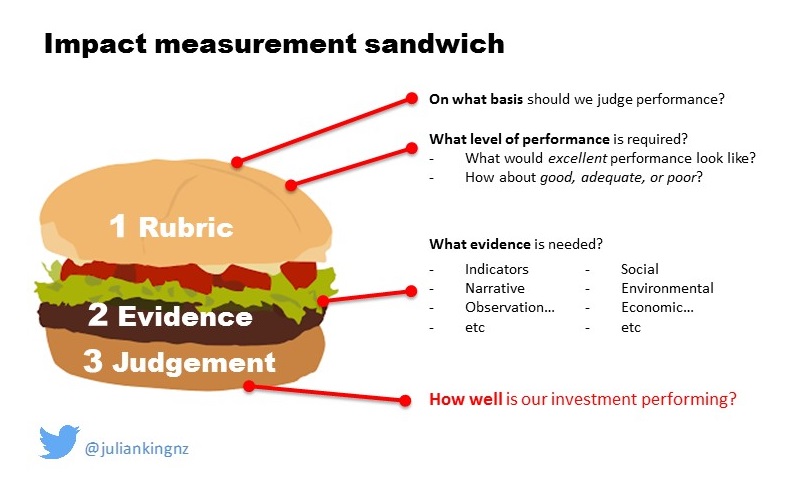

Evaluative thinking sandwiches the evidence. It gives us the filters we need to interpret the numbers and stories to answer the big picture questions. First, we need to define good performance so we’ll know it when we see it. Then we can determine what types of credible evidence we need, to ensure we are measuring the right things in the right way. Finally, we use our definitions of good performance to make a transparent judgement. This isn’t new. Good impact measurement already uses evaluative thinking – for example, check out this brand-new report on impact management.
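For readers who like to see ideas in code, here is a minimal Python sketch of that three-step sandwich: define good performance, gather the evidence, then make a transparent judgement. Everything in it is a made-up illustration rather than a real rubric: the criteria names, weights, thresholds and evidence values are all hypothetical, and the weighted average used for the overall rating is just one simple way to synthesise ratings, not the only or necessarily the best one.

```python
from dataclasses import dataclass

# Ordered performance levels, worst to best (hypothetical labels).
LEVELS = ["poor", "adequate", "good", "excellent"]


@dataclass
class Criterion:
    name: str
    weight: float        # relative importance, agreed with stakeholders
    thresholds: tuple    # cut-offs for adequate, good, excellent (in that order)
    higher_is_better: bool = True


def rate(criterion, value):
    """Return the index into LEVELS that an observed value reaches."""
    if criterion.higher_is_better:
        return sum(value >= cut for cut in criterion.thresholds)
    # For "lower is better" indicators (e.g. cost), a cut-off is met
    # when the value is at or below it.
    return sum(value <= cut for cut in criterion.thresholds)


def judge(criteria, evidence):
    """Rate each criterion, then combine ratings using the agreed weights."""
    ratings = {c.name: rate(c, evidence[c.name]) for c in criteria}
    total_weight = sum(c.weight for c in criteria)
    score = sum(c.weight * ratings[c.name] for c in criteria) / total_weight
    overall = LEVELS[int(score + 0.5)]  # round to the nearest level
    return {name: LEVELS[r] for name, r in ratings.items()}, overall


# Step 1: define good performance (all numbers are illustrative assumptions).
criteria = [
    Criterion("test score gain (points)", weight=3, thresholds=(2, 5, 10)),
    Criterion("attendance rate (%)", weight=2, thresholds=(80, 90, 95)),
    Criterion("cost per student ($)", weight=1, thresholds=(1200, 1000, 800),
              higher_is_better=False),
]

# Step 2: the evidence we collected (again, invented for the example).
evidence = {
    "test score gain (points)": 6,
    "attendance rate (%)": 85,
    "cost per student ($)": 950,
}

# Step 3: make the judgement, showing the workings as well as the verdict.
ratings, overall = judge(criteria, evidence)
for name, level in ratings.items():
    print(f"{name}: {level}")
print(f"Overall: {overall}")
```

The point of the sketch is that the thresholds and weights are written down before the evidence is interpreted, so anyone can see how the verdict was reached and argue with the values behind it.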

Evaluation can support good impact measurement by prompting us to properly consider what values matter, and whose values matter. This is about empowering communities. Impact investors, social entrepreneurs, and public funders use evidence to help make decisions. In this decision-making process they retain a high degree of control over what programmes are funded and how they are designed. Impact measurement provides evidence that describes how organisations have changed people’s lives – the what’s so. Evaluation goes further. It brings together the values of multiple stakeholder groups to reach evaluative conclusions from multiple perspectives – the so what. This gives voice, and more control, to communities. When done well, evaluation flattens hierarchies and shares power, to the benefit of all.

John Tamihere gave a keynote speech on the work that Te Pou Matakana and Te Whānau O Waipareira Trust have been doing with Social Ventures Australia to advance Māori-led impact measurement in whānau-centred services. I was a proud Kiwi in the audience as John challenged us all to empower communities to design their own solutions and avoid top-down approaches that impose the values of the privileged few.

https://twitter.com/juliankingnz/status/790907056870096896

Another highlight from IMPCON was the keynote from Bernice Sanders Smoot of Saint Wall Street. One thing Bernice said that particularly resonated was that there are two kinds of programs: those that work and those that claim they work. This points to the need to collect good evidence to support our claims. In my view it also speaks to a more subtle point: evidence can be used in different ways. For example, there’s evaluation and then there’s marketing. One is a form of honest and open inquiry. The other aims to be persuasive and emphasises potential value. Both are legitimate and important. Impact measurement can support both. But to advance social impact, we need to be clear about which is which.

Stay tuned for the action agenda – it’ll be out soon. The agenda will be advanced by cross-sector teams under the leadership of steward organisations including the Melbourne Graduate School of Education, Claremont Graduate University, and the Evaluation Centre at Western Michigan University. Many thanks to AEA President John Gargani, SVI Chief Executive Jeremy Nicholls, the Aspen Network of Development Entrepreneurs, and the Rockefeller Foundation for making IMPCON 2016 possible. Thanks also to John Gargani and Adrian Field for reviewing drafts of this blog. Any errors or omissions belong to me.

Julian King is an Auckland-based consultant specialising in evaluation, value for money, and impact measurement, and a valued member of Social Value Aotearoa and ANZEA. He is a member of the Kinnect Group and an Honorary Fellow at the University of Melbourne.